The free-energy FUQ (Frequently Unasked Questions)

A site where free-energy and related concepts are explored using the mnemonic medium developed by Orbit.

All models are wrong, some are illuminating and/or useful

...cunningly chosen parsimonious models often do provide remarkably useful approximations. For example, the law PV = RT relating pressure P, volume V and temperature T of an "ideal" gas via a constant R is not exactly true for any real gas, but it frequently provides a useful approximation and furthermore its structure is informative since it springs from a physical view of the behavior of gas molecules. For such a model there is no need to ask the question "Is the model true?". If "truth" is to be the "whole truth" the answer must be "No". The only question of interest is "Is the model illuminating and useful?".

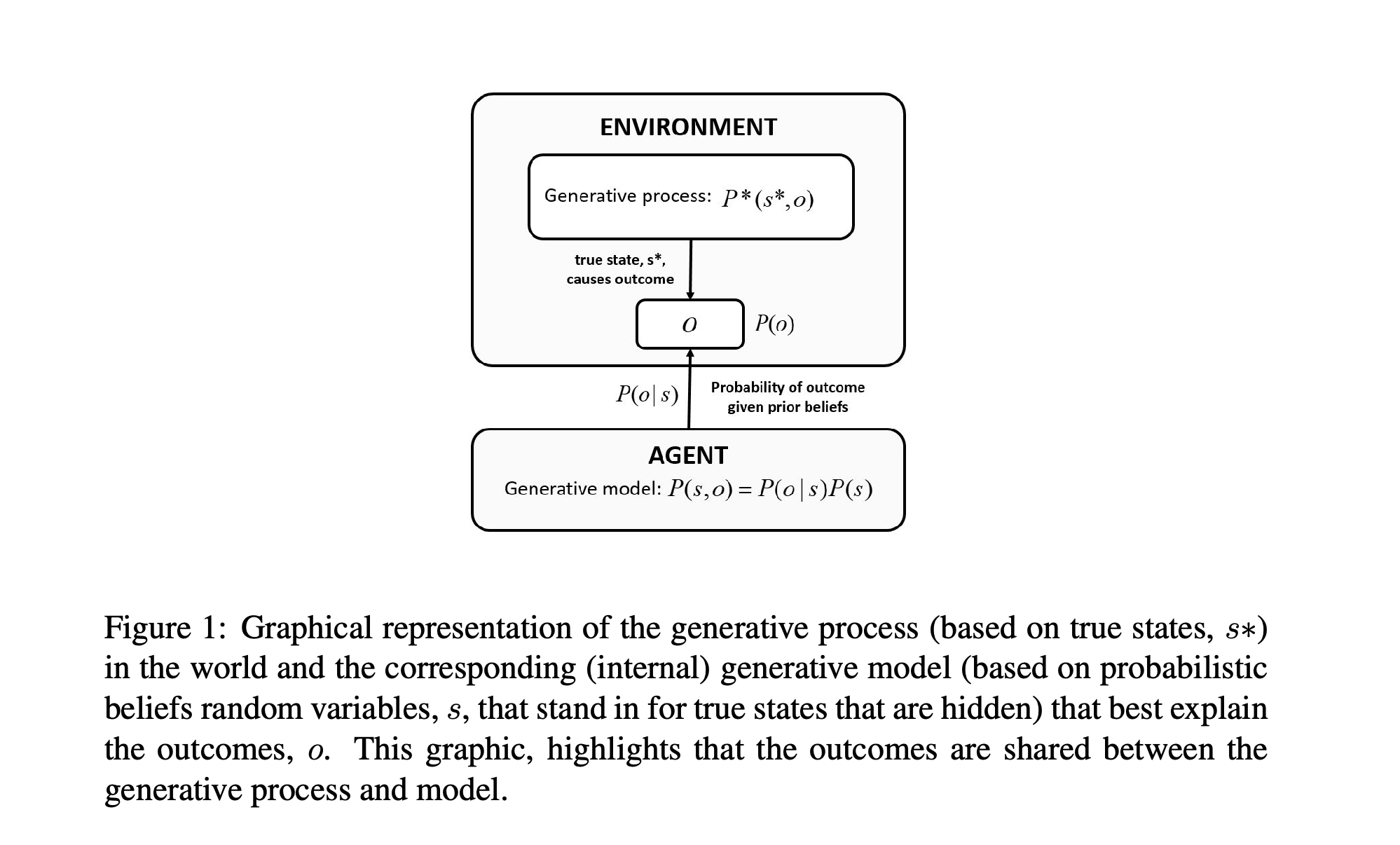

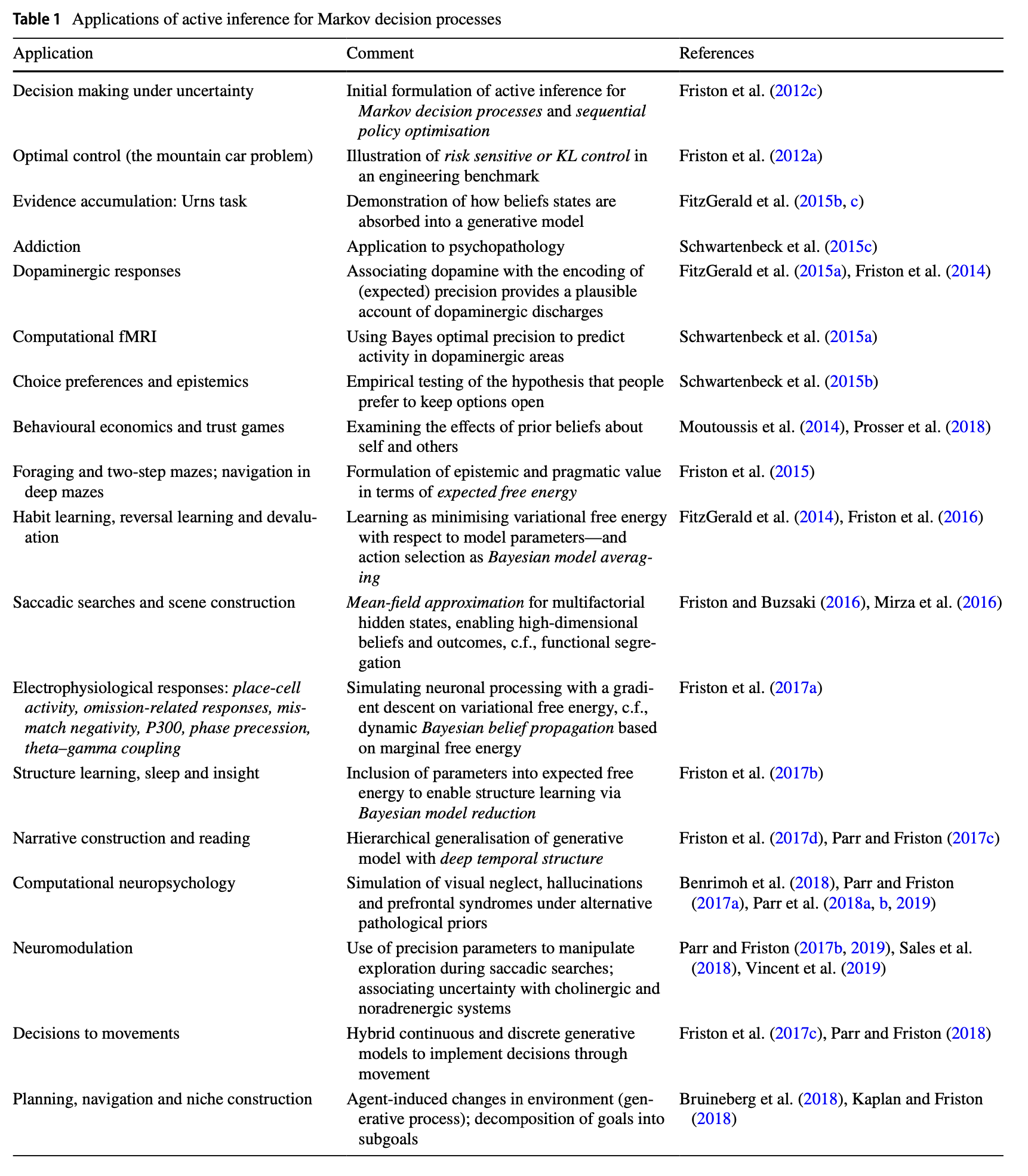

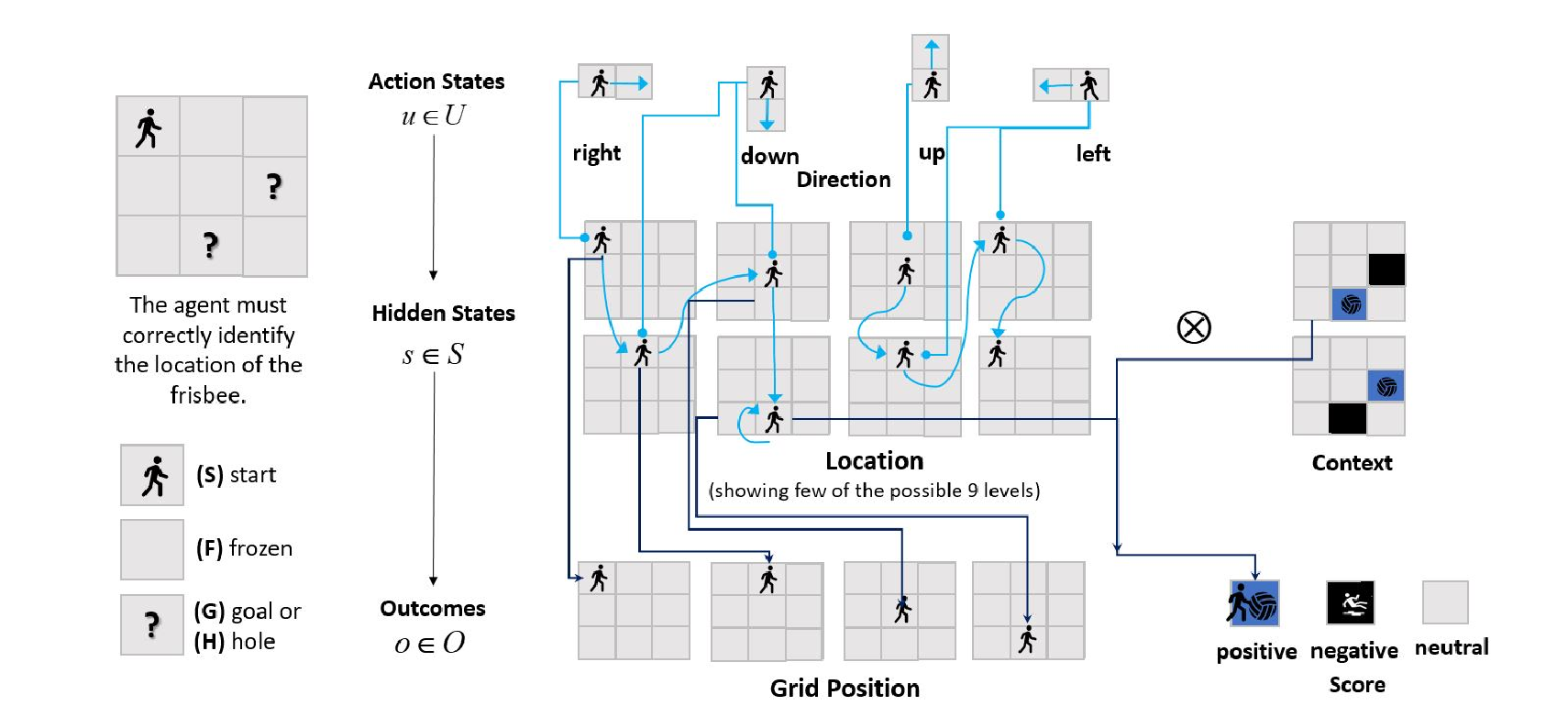

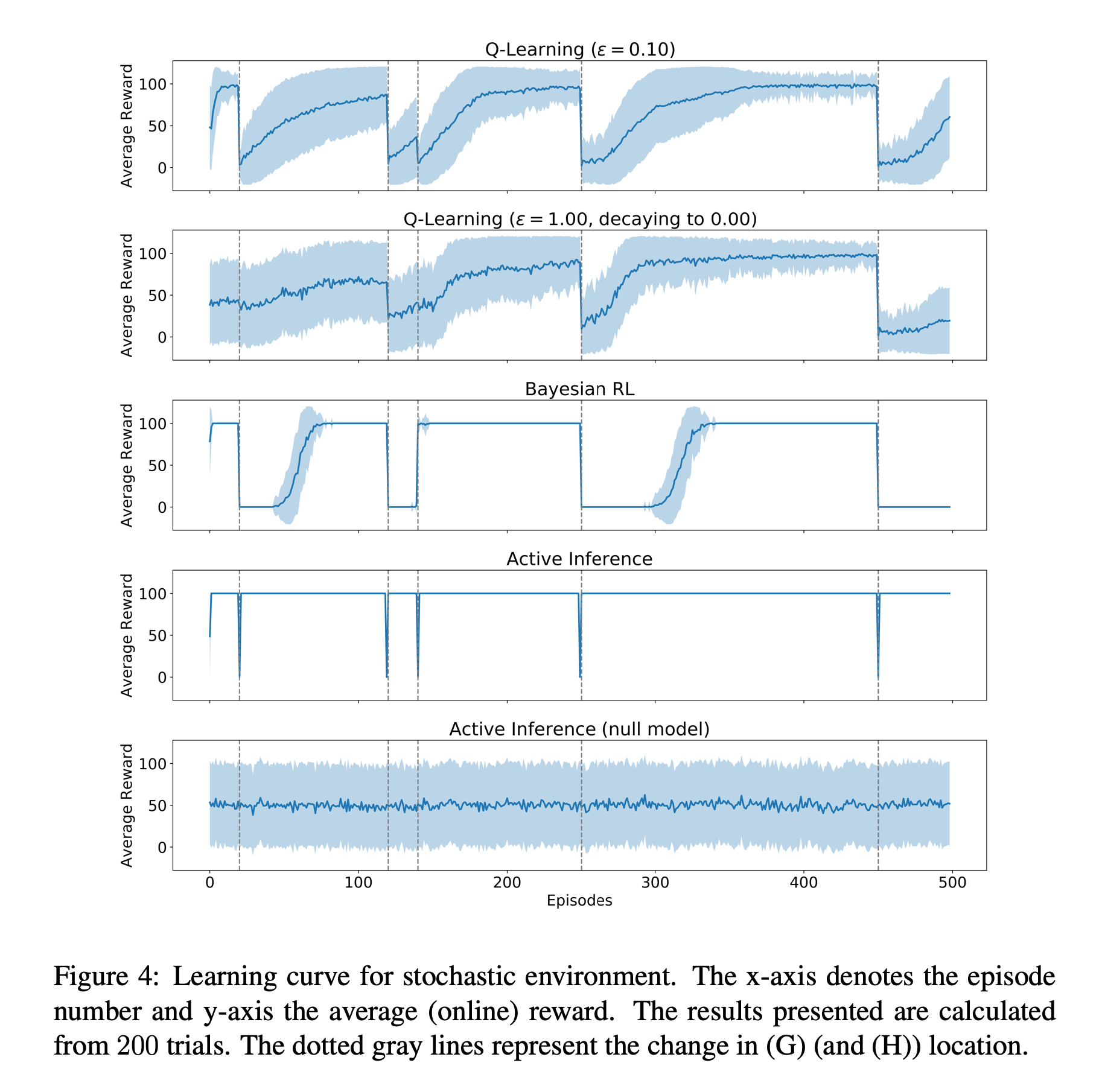

The graphic is from Active inference: demystified and compared, Neural Computation, 2021

- it connects the concept of free energy as used in information theory with concepts used in statistical thermodynamics.

- it shows that the free energy can be evaluated by an agent because the energy is the surprise about the joint occurrence of sensations and their perceived causes, whereas the entropy is simply that of the agent’s own recognition density.

- it shows that free energy rests on a generative model of the world, which is expressed in terms of the probability of a sensation and its causes occurring together. This means that an agent must have an implicit generative model of how causes conspire to produce sensory data. It is this model that defines both the nature of the agent and the quality of the free-energy bound on surprise.
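The energy-minus-entropy decomposition in the bullets above can be made concrete with a toy discrete model. The sketch below is a minimal illustration that assumes a hypothetical generative model with two hidden causes and two possible sensations. The probabilities are made up, and the names (`PRIOR`, `LIKELIHOOD`, `free_energy`, `exact_posterior`) are illustrative only; they do not come from the graphic or from the paper's repository. It computes free energy as the expected surprise about the joint occurrence of sensations and causes under a recognition density q, minus the entropy of q. It then checks that this quantity upper-bounds the surprise -ln p(o), with equality when q is the exact posterior.

```python
import math

# Hypothetical toy generative model (illustrative numbers, not from the paper):
# two hidden causes s and two possible sensations o.
PRIOR = {"s0": 0.7, "s1": 0.3}                 # p(s)
LIKELIHOOD = {                                 # p(o | s)
    "s0": {"o0": 0.9, "o1": 0.1},
    "s1": {"o0": 0.2, "o1": 0.8},
}


def joint(o, s):
    """Generative model: p(o, s) = p(o | s) * p(s)."""
    return LIKELIHOOD[s][o] * PRIOR[s]


def free_energy(q, o):
    """F[q] = E_q[-ln p(o, s)] - H[q], i.e. energy minus entropy.

    Only the joint p(o, s) and the recognition density q are needed.
    """
    support = [s for s, p in q.items() if p > 0]
    energy = sum(q[s] * -math.log(joint(o, s)) for s in support)
    entropy = -sum(q[s] * math.log(q[s]) for s in support)
    return energy - entropy


def surprise(o):
    """-ln p(o), which requires summing the joint over all causes."""
    return -math.log(sum(joint(o, s) for s in PRIOR))


def exact_posterior(o):
    """p(s | o) by Bayes' rule; the q that makes the bound tight."""
    evidence = sum(joint(o, s) for s in PRIOR)
    return {s: joint(o, s) / evidence for s in PRIOR}


if __name__ == "__main__":
    o = "o1"
    q_guess = {"s0": 0.5, "s1": 0.5}  # an arbitrary recognition density
    print("F(guess)     =", free_energy(q_guess, o))             # >= surprise
    print("F(posterior) =", free_energy(exact_posterior(o), o))  # equals surprise, up to rounding
    print("surprise     =", surprise(o))
```

`free_energy` only ever uses the joint p(o, s) and q, never the evidence p(o). This is why an agent can evaluate it even when the surprise itself is intractable. The gap between the two is the KL divergence between q and the true posterior.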

Generalised free energy and active inference, Biological Cybernetics, 2019

Git repository for the paper Active inference: demystified and compared, Neural Computation, 2021

References